Technology: A deep dissection of Google's artificial intelligence

The era of artificial intelligence has arrived: Google is built on AI, integrates it into everyday life, and makes the impossible possible

From HAL 9000 in Stanley Kubrick's "2001: A Space Odyssey" (1968), to R2-D2 in "Star Wars" (1977), to David in "A.I." (2001), to BB-8 in the recent "Star Wars: The Force Awakens", countless movie robots, the forward-looking creations of Hollywood visionaries, have brought us closer to artificial intelligence.

From AlphaGo's Go match against Lee Sedol to smart products such as Google Home and Google Assistant, and on to cloud computing hardware, Google has officially established a corporate strategy that prioritizes artificial intelligence. Its AI business covers everything from hardware to software: search algorithms, translation, speech and image recognition, driverless vehicle technology, and medical and pharmaceutical research.

The ultimate goal of AI is to imitate how the brain operates; GPUs have helped popularize AI, but three major problems still need to be solved

The ultimate goal of artificial intelligence is to imitate the thinking and operation of the human brain, but supervised learning, the more mature approach today, does not follow this model. In the end, unsupervised learning is the most natural way for the human brain to learn.

We believe that over the past 5-10 years artificial intelligence has been commercialized and popularized mainly thanks to the rapid increase in computing power: 1) the progress of Moore's Law, which has accelerated the decline in hardware prices; 2) the popularity of cloud computing; and 3) the use of GPUs, which has improved multi-dimensional computing capabilities. Together these have greatly promoted the commercialization of AI.

There are three major problems in machine learning:

- It relies on a large amount of data and samples for training and learning;
- Learning is confined to a specific sector and domain (domain- and context-specific);
- The data representation and learning algorithm must be selected manually to achieve optimal learning.

Google's market value is seriously underestimated, and the rise of the moonshot business will usher in a new golden decade

The core of the company's profitability is its AI-driven search and advertising business. Although advertising still accounts for 90% of revenue, the rise of the Other Bets business over the next 3-5 years will usher in a new golden decade for Google. The market has been comparing Facebook and Google: Google's 2017 P/E is 19x versus Facebook's 22x, and we think Google is seriously undervalued. In Google's advertising business, the rising share of mobile and the falling share of PC mark a transition period toward a new normal. The huge growth potential of enterprise (B2B) cloud computing and YouTube, together with Google's progress in artificial intelligence, also puts it far ahead of Facebook. The rapid revenue growth of the moonshot business further shows that Google's capacity for innovation keeps increasing. Building on its accumulated AI expertise, the moonshot projects should rise one by one over the next 3-5 years. In the long run Google can be regarded as a venture capital portfolio: even if only one or two projects succeed, its market value could increase greatly. We think a 2017 P/E of 23x is reasonable, giving a target price of $920 and a "buy" rating.
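
The valuation arithmetic above can be made explicit. This is a minimal sketch, assuming the 19x and 23x multiples are applied to the same 2017 earnings-per-share estimate; the implied EPS, implied current price, and upside are back-calculated here and are not figures stated in the report.

```python
# Figures taken from the text.
target_price = 920.0   # USD target price
target_pe = 23.0       # P/E multiple the report considers reasonable
current_pe = 19.0      # Google's 2017 P/E quoted in the text

# Assumption: both multiples use the same 2017 EPS estimate.
implied_eps = target_price / target_pe            # EPS implied by the target
implied_price = current_pe * implied_eps          # price implied by the 19x multiple
upside = target_price / implied_price - 1.0       # relative gap to the target

print(f"implied EPS: ${implied_eps:.2f}")
print(f"implied current price: ${implied_price:.2f}")
print(f"implied upside: {upside:.1%}")
```

Under that assumption, moving from 19x to 23x on unchanged earnings accounts for the entire gap between the implied price and the target.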

The Soul and Backbone of Google: Artificial Intelligence Technology

Google's artificial intelligence business covers everything from hardware to software, including search algorithms, speech and image recognition, translation, driverless vehicle technology, and medical and drug research. It is the soul and backbone of the company. Here we sort through the various businesses of Google AI.

1.

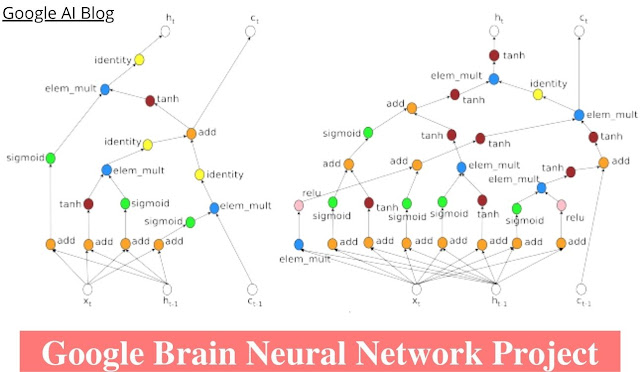

Google Brain Neural Network

Project

Google Brain, a project created in 2011, is the cradle of many familiar projects, including TensorFlow, word embeddings, Smart Reply, DeepDream, Inception, and sequence-to-sequence learning. Deep learning is critical to Google: it is a very efficient tool for training computers to recognize images and videos, giving them human-like capabilities in face recognition, object recognition, and natural language analysis.

Figure: Google Brain Neural Network Project

2. The second-generation machine learning open-source platform: TensorFlow

In November 2015, Google announced that it was open-sourcing TensorFlow under the Apache 2.0 license. TensorFlow works by passing data represented as tensors (multi-dimensional arrays) through a neural network for analysis and processing. Its performance is up to 5 times faster than that of DistBelief, Google's first-generation artificial intelligence system. In April 2016, Google's DeepMind, which developed the Go-playing program AlphaGo, announced that all of its future research projects would use the TensorFlow platform.
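
The idea of a tensor flowing through a chain of operations can be illustrated without TensorFlow itself. The following is a minimal sketch in plain Python, not the TensorFlow API: the helper functions `matmul`, `add_bias`, and `relu`, and all of the numbers, are hypothetical and chosen only for illustration.

```python
def matmul(a, b):
    """Multiply two rank-2 tensors stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add_bias(m, bias):
    """Add a bias vector to every row of a rank-2 tensor."""
    return [[v + b for v, b in zip(row, bias)] for row in m]

def relu(m):
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in m]

# A rank-2 tensor: a batch of 2 examples with 3 features each.
inputs = [[1.0, 2.0, 3.0],
          [0.0, -1.0, 1.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, -1.0]]  # shape 3 x 2
bias = [0.5, 0.5]

# The "graph": an ordered list of operations the tensor flows through.
graph = [lambda t: matmul(t, weights), lambda t: add_bias(t, bias), relu]

tensor = inputs
for op in graph:
    tensor = op(tensor)

print(tensor)  # [[4.5, 0.0], [1.5, 0.0]]
```

A real framework adds automatic differentiation, hardware placement, and distributed execution on top of this basic picture.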

Figure: TensorFlow

3. Google's latest search algorithm: RankBrain

RankBrain, an artificial intelligence system that was added to Google's search algorithm in October 2015, became the third most important ranking signal in the entire algorithm within just a few months. RankBrain helps Google process search results and provide relevant information, and it can handle the roughly 15% of daily Google queries that have never been searched before.

Figure: Google RankBrain

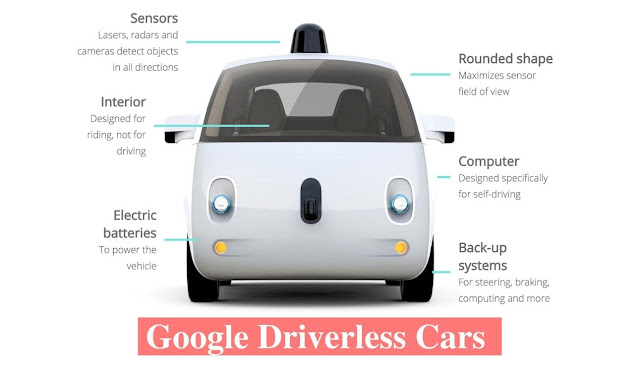

4. Google Driverless Cars and Google Chauffeur

At the end of 2014, Google unveiled a prototype driverless vehicle with no steering wheel and no brake pedal, designed to be fully autonomous. Its main components include a LIDAR (Light Detection and Ranging) sensing system composed of 64 laser units. The car relies on a navigation and map-scanning system that costs between $75,000 and $85,000, and it is controlled by the Google Chauffeur artificial intelligence system. The camera and the LIDAR system scan the vehicle's surroundings and feed the data into the on-board computer, which classifies each object according to its shape, size, movement pattern, and other characteristics.

Figure: Google Driverless Cars

5. The Combination of Machine Learning and Machine Vision: Image Recognition

In 2013, Google announced that it would add computer vision (also called machine vision) and machine learning technology to its image search function. Users only need to type the name of an item to get matching photo search results. The image recognition technology is based on the architecture of DistBelief, Google's first-generation deep learning system. Its core technology is a redesigned convolutional neural network combined with distributed learning. Compared with the neural networks of other teams, this architecture uses more than 10 times fewer parameters.

Figure: Image Recognition

6. Natural Language Understanding Open Source Platform: SyntaxNet

In May 2016, Google open-sourced SyntaxNet, its natural language understanding (NLU) framework built on the TensorFlow machine learning platform, and released Parsey McParseface, a pre-trained parser for English. SyntaxNet uses a deep neural network to resolve linguistic ambiguity. Given an input sentence, SyntaxNet processes the words from left to right and incrementally adds the dependencies between the words it has analyzed.
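
The left-to-right, incremental style of parsing described above can be sketched as a toy transition-based dependency parser. This is an illustration under stated assumptions and not SyntaxNet's code: in SyntaxNet a neural network chooses each action, whereas here the action sequence is supplied by hand, and the function name `parse` and the action labels are hypothetical.

```python
def parse(words, actions):
    """Apply arc-standard style transitions and return (head, dependent) pairs."""
    stack = []                          # indices of partially processed words
    buffer = list(range(len(words)))    # indices of words not yet read
    arcs = []                           # collected (head, dependent) index pairs
    for action in actions:
        if action == "SHIFT":           # read the next word, left to right
            stack.append(buffer.pop(0))
        elif action == "LEFT_ARC":      # second-from-top depends on the top word
            dependent = stack.pop(-2)
            arcs.append((stack[-1], dependent))
        elif action == "RIGHT_ARC":     # top word depends on the one below it
            dependent = stack.pop()
            arcs.append((stack[-1], dependent))
        else:
            raise ValueError(f"unknown action: {action}")
    return [(words[h], words[d]) for h, d in arcs]

sentence = ["Alice", "saw", "Bob"]
# Hand-written action sequence; a trained model would predict these one by one.
steps = ["SHIFT", "SHIFT", "LEFT_ARC", "SHIFT", "RIGHT_ARC"]
dependencies = parse(sentence, steps)
print(dependencies)  # [('saw', 'Alice'), ('saw', 'Bob')]
```

Ambiguity shows up as more than one plausible action at a given step, which is where a learned scoring model earns its keep.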

7. Natural Language Understanding and Machine Translation: Gmail/Inbox Smart Reply

Gmail/Inbox Smart Reply, launched in November 2015, uses deep learning technology to write email replies. Gmail uses machine learning to identify emails that need a response from the user and suggests three suitable candidate replies.

8. The AI behind Allo’s Smart Reply

In Allo, the app can also generate smart reply options from the user's conversation history. The Allo team used an approach similar to a two-step "encode-decode" model. First, a recurrent neural network encodes the conversation word by word to generate a corresponding token. The token then enters a long short-term memory (LSTM) neural network to produce a continuous vector, which is passed through a softmax model to produce a discretized semantic class. The next step for the Allo team is to use a second recurrent neural network to pick the most appropriate response from the set of candidates.
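
The softmax step, which turns a continuous vector into a discrete class, can be sketched as follows. This is a minimal illustration, assuming the vector already holds one score per class; the class names and score values are hypothetical and are not taken from Allo.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                              # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical semantic classes and a hypothetical continuous vector of scores.
semantic_classes = ["greeting", "agreement", "question"]
vector = [0.2, 2.5, 1.0]

probs = softmax(vector)
best_class = semantic_classes[probs.index(max(probs))]  # discretize: keep the most likely class
print(best_class)  # agreement
```

Picking the highest-probability class is what makes the output discrete; the probabilities themselves remain available if several candidate classes are wanted.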

9. Google Translate: Machine Translation Systems and Image Recognition

Google released Google Translate 10 years ago, and the core algorithm behind it was Phrase-Based Machine Translation (PBMT). The neural machine translation (NMT) system that Google now uses instead treats the entire sentence as the basic input unit for translation.

Figure: Google Translate

10. DeepMind's Deep Q-Network (DQN): imitating experience replay in the human hippocampus

In February 2015, DeepMind published the paper "Human-level control through deep reinforcement learning" in Nature, describing the Deep Q-Network (DQN), a deep reinforcement learning system that combines deep neural networks with reinforcement learning. Q-learning, on which DQN is based, is a model-free reinforcement learning method that is often used to choose optimal actions in finite Markov decision processes.
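
Q-learning with experience replay can be sketched on a toy problem. This is a simplified illustration and not DeepMind's implementation: a lookup table stands in for the deep neural network, and the five-state corridor environment, the function names, and all hyperparameters are assumptions made for the example.

```python
import random

N_STATES, GOAL = 5, 4   # a corridor of states 0..4; reaching state 4 ends the episode
ACTIONS = (0, 1)        # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic toy environment: reward 1.0 only on reaching the goal."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done

def train(episodes=300, alpha=0.5, gamma=0.9, batch=8, capacity=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q-table replaces the deep network
    replay = []                                 # experience replay memory
    for _ in range(episodes):
        state = 0
        for _ in range(100):
            action = rng.choice(ACTIONS)        # random behavior; Q-learning is off-policy
            nxt, reward, done = step(state, action)
            replay.append((state, action, reward, nxt, done))
            if len(replay) > capacity:
                replay.pop(0)                   # forget the oldest experience
            # Learn from a random minibatch of remembered transitions.
            for s, a, r, s2, d in rng.sample(replay, min(batch, len(replay))):
                target = r if d else r + gamma * max(q[s2])
                q[s][a] += alpha * (target - q[s][a])
            if done:
                break
            state = nxt
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(policy)  # greedy action for states 0..3
```

Sampling past transitions at random, instead of learning only from the latest one, breaks the correlation between consecutive experiences; this is the replay mechanism the heading compares to the hippocampus.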

11. DeepMind launches text-to-speech system WaveNet

DeepMind also launched WaveNet, a new piece of research in computer speech synthesis. It is a text-to-speech (TTS) system that uses a neural network to model the raw audio waveform. DeepMind says the audio generated by WaveNet reduces the gap between computer-generated audio and natural human speech by 50%, surpassing all previous text-to-speech systems.

12. DeepMind's medical exploration using image recognition technology

More than 3,000 ophthalmic optical coherence tomography (OCT) scans are performed every week at Moorfields Eye Hospital in London, England. The hospital has provided DeepMind with 1 million patient eye scans, along with the associated routine diagnosis and treatment information.

13. Large-scale machine learning for drug discovery

In February 2015, Google and Stanford University jointly submitted the paper "Massively Multitask Networks for Drug Discovery". Google is collaborating with a Stanford University lab to explore how data from multiple sources can improve accuracy in determining which compounds are effective in treating diseases. Going a step further, Google measured how different amounts and types of biological data from multiple diseases improve the predictive accuracy of virtual drug screening.

14. YouTube video thumbnails with computer vision

In October 2015, Google announced computer vision technology that applies deep neural networks for image and video classification and recognition to produce better thumbnails for YouTube. When choosing a video thumbnail, the program's "quality model" scores the candidate images, and Google has now added deep neural network technology to this model.

15. The ultimate solution for machine learning computing power: quantum computing

In May 2013, Google announced the official establishment of its Quantum Artificial Intelligence Laboratory, founded jointly with NASA's Ames Research Center and the Universities Space Research Association (USRA). The lab, located at NASA's Moffett Federal Airfield in Silicon Valley, California, houses a D-Wave Two quantum computer that Google bought from quantum computer maker D-Wave Systems. Google's goal is to use the computing power of quantum computers to explore technologies in artificial intelligence and machine learning, and to build better learning models for research in weather forecasting, disease treatment, search algorithm improvement, and speech recognition. Quantum computers are expected to solve such optimization problems more efficiently, that is, to skip past locally optimal solutions and find the globally optimal solution directly.
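
The difference between a local optimum and the global optimum mentioned above can be shown with a purely classical toy example. This sketch does not simulate quantum hardware or D-Wave's method; the energy landscape values and the function name `greedy_descent` are hypothetical.

```python
def greedy_descent(energy, start):
    """Walk to the lower-energy neighbor until no neighbor improves."""
    i = start
    while True:
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(energy)]
        best = min(neighbors, key=lambda j: energy[j])
        if energy[best] >= energy[i]:
            return i            # stuck: every neighbor is at least as high
        i = best

# Hypothetical one-dimensional energy landscape with two valleys.
landscape = [5, 3, 4, 6, 2, 0, 1, 7]

local_min = greedy_descent(landscape, start=0)
global_min = min(range(len(landscape)), key=lambda j: landscape[j])

print(local_min, landscape[local_min])    # 1 3  (shallow valley)
print(global_min, landscape[global_min])  # 5 0  (deepest valley)
```

A step-by-step search that only accepts improvements stops in the shallow valley at index 1, while the deepest valley sits at index 5 behind a barrier. Getting past such barriers is the capability the text attributes to quantum computers.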

16. Self-developed AI hardware: Tensor Processing Unit (TPU)

At present, Google's cloud platform already offers cloud machine learning, a computer vision API, and a language translation API, so that every user of Google's cloud computing platform can use the same machine learning systems that Google uses internally.

To support the operation of its cloud platform, Google has designed a hardware device customized for artificial intelligence workloads, the Tensor Processing Unit (TPU). The TPU is an application-specific integrated circuit (ASIC) tailored for machine learning and TensorFlow.
